Tag: Ableton Live

NIME 2020 – ScreenPlay: A Topic-Theory-Inspired Interactive System

Just over a year ago I was scheduled to demonstrate my research at NIME 2019, which took place at the Federal University of Rio Grande do Sul (UFRGS) in Porto Alegre, Brazil. My journey there comprised three flights (Manchester to Paris, Paris to Rio de Janeiro, and, finally, Rio de Janeiro to Porto Alegre), with a total travel time of approximately 18 hours. Unfortunately, immediately prior to boarding my flight to Paris, I received a text from the airline telling me that my flight from Paris to Rio de Janeiro had been cancelled. Assuming that a suitable alternative means of continuing my journey would be provided, I decided to fly to Paris anyway. Upon arrival at Charles de Gaulle airport, however, I was informed that, in order to complete my journey, I would be required to fly to Lima in Peru the next morning before catching a connecting flight to Porto Alegre; a change that added an additional 12 hours to my total journey time and would see me miss the first day of the conference. Accordingly, I instead decided to return to Manchester the next day, a decision which meant I would miss the conference altogether.

Thankfully, I was able to showcase my research later that summer at Audio Mostly at the University of Nottingham and was again invited to demonstrate my research at NIME 2020, which was scheduled to take place at the Royal Birmingham Conservatoire in the UK. When the COVID-19 pandemic struck, it looked like my attempts to attend NIME would again be thwarted. The response from the conference organisers, however, was amazing and, in the face of adversity, they ran the conference remotely with great success. I was required not only to provide a real-time demonstration of my research via Zoom but also to produce a demonstration video, which can be seen below. Despite less-than-ideal circumstances, my experience at NIME was great and I hope to attend again in the future.

gtm.tempoctrl

I recently discovered that, while it is possible to map the Max for Live LFO device to Ableton Live’s global tempo, doing so results in no audible change to the tempo despite the displayed tempo value changing. As such, I made gtm.tempoctrl, which affords a variety of ways in which to automate global tempo transitions in Ableton Live.

The device can be downloaded here.

Enjoy!

ScreenPlay: A Topic-Theory-Inspired Interactive System in Organised Sound 25(1)

I have recently published an article in Organised Sound Volume 25 Issue 1, Computation in the Sonic Arts, entitled ScreenPlay: A Topic-Theory-Inspired Interactive System, which can be accessed for free here. The paper is the culmination of a research project that I began in 2013 for my PhD and have continued working on since the completion of my PhD in 2017. The abstract for the paper can be read below:

ScreenPlay is a unique interactive computer music system (ICMS) that draws upon various computational styles from within the field of human–computer interaction (HCI) in music, allowing it to transcend the socially contextual boundaries that separate different approaches to ICMS design and implementation, as well as the overarching spheres of experimental/academic and popular electronic musics. A key aspect of ScreenPlay’s design in achieving this is the novel inclusion of topic theory, which also enables ScreenPlay to bridge a gap spanning both time and genre between Classical/Romantic era music and contemporary electronic music; providing new and creative insights into the subject of topic theory and its potential for reappropriation within the sonic arts.

More information on ScreenPlay in addition to a demo video and free download link can be found elsewhere in my blog.

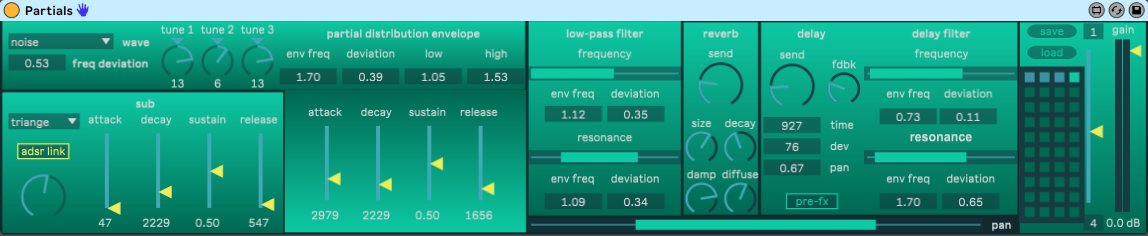

Updated Partials Max for Live Instrument

Newly updated Max for Live Instrument with improved Ableton Live and Push integration. Download also includes Max patcher and Max collective (not updated). Download for free here!

Read more about Partials here.

ScreenPlay 1.1 Available to Download

ScreenPlay version 1.1 is now available to download for free here!

The download includes a suite of Max for Live MIDI Devices, an Ableton Live Audio Effect Rack, and Liine Lemur GUI templates.

Barcelona Metro

Originally composed for the Martini Elettrico festival hosted by Conservatorio di Musica Giovan Battista Martini of the University of Bologna in April 2018, the piece Barcelona Metro is founded upon a recording captured many years ago of a busker in a Barcelona Metro station playing a rendition of “Every Breath You Take” by The Police. The sample has been heavily processed using the Iota granular looping Max for Live instrument and can be heard throughout the piece; most notably in the introduction and breakdown sections. In addition to this, a multitude of LFOs employing various waveforms have been used to control a vast array of parameters, from simple panning to the timbre of the kick drum, the glitchy beat repeat effect applied to the drums, and the note duration of the arpeggiated lead. There is also an arpeggiated bass that slowly moves in and out of synchronization with a second lead that, for the most part, mimics the notes played in the bass only higher in register. The accumulation of these stochastic processes results in a piece that is, at times, chaotic and unpredictable, whilst simultaneously retaining many stylistic traits more commonly associated with popular electronic music.

This is the first piece of music I have finished in quite some time; I hope you enjoy it.

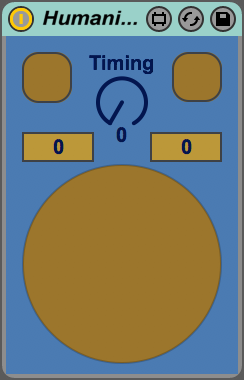

Humaniser Max for Live MIDI Device

Humaniser is a simple Max for Live MIDI device that serves to alter both the velocity and timing of notes within a clip. The possible range for velocity values can be determined either by the minimum/maximum note velocities present in the clip at the time humanisation takes place, or specified manually. It is also possible to lock/unlock the timing position of the very first note in the clip, so as to prevent that note from being shifted back in time and starting before the beginning of the clip.
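To make the behaviour described above a little more concrete, here is a minimal Python sketch of the same idea. It is not the Max for Live patch itself: the `Note` class, the `humanise` function and the `max_shift` parameter are all hypothetical names invented for illustration, and uniform random offsets are an assumption on my part.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Note:
    """Hypothetical stand-in for a MIDI note in a clip."""
    pitch: int       # MIDI note number
    start: float     # position in beats from the start of the clip
    duration: float  # length in beats
    velocity: int    # 1-127


def humanise(notes, max_shift=0.05, velocity_range=None, lock_first=True, rng=None):
    """Return a copy of `notes` with randomised velocities and timings.

    velocity_range: (low, high) set manually, or None to use the
    minimum/maximum velocities currently present in the clip.
    lock_first: keep the earliest note(s) at their original position so
    they cannot be moved to before the beginning of the clip.
    """
    rng = rng or random.Random()
    if not notes:
        return []
    if velocity_range is None:
        low = min(n.velocity for n in notes)
        high = max(n.velocity for n in notes)
    else:
        low, high = velocity_range
    first_start = min(n.start for n in notes)
    result = []
    for n in notes:
        locked = lock_first and n.start == first_start
        shift = 0.0 if locked else rng.uniform(-max_shift, max_shift)
        result.append(replace(n, start=n.start + shift,
                              velocity=rng.randint(low, high)))
    return result


clip = [Note(60, 0.0, 0.5, 80), Note(62, 1.0, 0.5, 100), Note(64, 2.0, 0.5, 90)]
humanised = humanise(clip, rng=random.Random(0))
```

With `lock_first=False` the earliest note is free to move in either direction, which is exactly the situation the lock exists to avoid.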

ScreenPlay Demo

Between 2013 and 2016 I was working primarily towards my PhD thesis at the University of Salford; the focus of which was human-computer interaction (HCI) in music. Since then, however, I have struggled to find the time to post about the results of my research over the course of those 3 years amidst finding and starting a new job, and ongoing teaching responsibilities.

The focal point of my research has been the design and development of a unique and innovative interactive computer music system (ICMS), ScreenPlay. There are three main approaches to the design of ICMSs: sequenced, generative, and transformative. Sequenced systems are usually tailored towards a lone user and afford the opportunity to orchestrate/arrange a predetermined composition from constituent parts/loops. These systems are excellent for novice users due to the coherency of their musical output and their often simple and engaging user interfaces (UIs). However, they often afford the computer no meaningful influence over the generated musical output. Incredibox is an excellent example of a sequenced ICMS.

Generative systems rely on an underlying algorithmic framework to generate musical responses to the input of the user. Better suited to supporting multiple users simultaneously, the musical output of generative systems is often stylistically ambient, and there can be little discernible connection between the control actions/gestures of the user(s) and the resulting musical output – thus limiting the scope for user(s) to exert a tangible influence over the music. As a result, generative systems can struggle to engage users with a higher level of musical proficiency. Examples of generative systems include NodeBeat and Brian Eno’s Bloom and Reflection apps.

In most instances transformative systems are designed to respond to the incoming audio signal from a live instrument, and transform the sound of the instrument through various means of manipulation. Many early ICMSs were transformative in nature, with the process of design and implementation often being explicitly aligned with the composition and performance of a specific musical work. As a result, such systems are known as “score followers”; Pluton by Philippe Manoury being a prime example. The reliance of transformative systems on a level of instrumental proficiency means that contemporary examples are scarce, in particular in the context of electronic music.

A common trait among the three approaches to ICMS design outlined above is that the resulting systems often prioritise affording the user(s) influential control over one or two distinct musical parameters/characteristics, while ignoring the immense creative possibilities offered by the multitude of other musical parameters/characteristics available. The systems mentioned above are just a few examples of ICMSs that each exhibit the characteristics of only one of the three overarching approaches: sequenced, generative and transformative. This exclusivity of focus is a common hindrance to the vast majority of ICMSs, and ScreenPlay seeks to combat it by encapsulating and evolving the fundamental principles behind the three system design models in what is a novel approach to ICMS design. It also introduces new and unique concepts to HCI in music in the form of a bespoke topic-theory-inspired transformative algorithm, which is applied alongside Markovian generative algorithms to break routine in collaborative improvisatory performance, to generate new musical ideas in composition, and to provide additional dimensions of expressivity in both composition and performance. Another of ScreenPlay’s unique design features is its multifunctionality: it can exist as both a multi-user-and-computer interactive performance system and a single-user-and-computer studio compositional tool. In multi mode, each individual involved in collaborative, improvisatory performance is afforded a dedicated GUI, with up to sixteen separate users hosted through a single instance of Ableton Live.

The primary goal throughout ScreenPlay’s development cycle has been that the convergence of all these different facets of its design should culminate in an ICMS that excels in providing users/performers of all levels of musical proficiency and experience with ICMSs an intuitive, engaging and complete interactive musical experience.

As previously mentioned, one of the most novel inclusions in the design of ScreenPlay within the context of HCI in music is the topic-theory-inspired transformative algorithm. Topic theory, which was particularly prevalent during the Classical and Romantic periods, is a compositional tool whereby the composer employs specific musical identifiers – known as “topics” – in order to evoke certain emotional responses and cultural/contextual associations from the audience. In ScreenPlay the concept of topic theory is implemented in reverse: textual descriptors presented to the user(s) via the GUI describe the audible transformative effects that a variety of “topical oppositions” have upon the musical output of the system.

In total ScreenPlay affords the user(s) a choice of four “topical oppositions”, each of which is presented on the GUI as two opposing effectors at either end of a horizontal slider; the position of the slider between the two poles dictates the transformative effect that the opposition has on the musical output of the system. The four oppositions are “joy-lament”, “light-dark”, “open-close”, and “stability-destruction”. The first acts by altering the melodic and rhythmic contour of a musical phrase/loop to imbue the musical output of the system with a more joyous or melancholic sentiment respectively, while the three remaining oppositions transform the textural/timbral characteristics of the music in various ways. To achieve the effect indicated by the position of the corresponding slider at the moment the user triggers the transformation, the algorithm that underpins the “joy-lament” opposition performs a number of probability-based calculations, the weightings of which change in accordance with the position of the slider and so alter the relative transformative effect. These calculations govern the intervals applied between successive pitches in a melodic line, with certain intervals being favoured more heavily depending on the position of the slider; the increase/decrease in the number of notes within a melody, note duration and speed of movement between notes; and the overall directional shape of the melody, whether favouring upward or downward movement. The sliders for the three textural/timbral oppositions (“light-dark”, “open-close” and “stability-destruction”) set the values of a number of macro controls on Ableton Effect Racks, each of which is mapped to multiple parameters across numerous effects.
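The idea of slider-dependent weightings can be illustrated with a short Python sketch. This is not ScreenPlay’s actual algorithm, which lives in Max for Live: the weight tables, the function names and the convention that 0.0 means fully “joy” and 1.0 fully “lament” are all assumptions made purely for the example, and only the choice of interval is covered here.

```python
import random

# Hypothetical weight tables (semitone interval -> relative weight).
# The specific intervals and numbers are illustrative assumptions only.
JOY_WEIGHTS = {4: 4.0, 7: 3.0, 2: 2.0, -1: 0.5, -3: 0.5}
LAMENT_WEIGHTS = {4: 0.5, 7: 0.5, 2: 1.0, -1: 3.0, -3: 4.0}


def interval_weights(slider):
    """Blend the two tables linearly; slider 0.0 = joy, 1.0 = lament."""
    if not 0.0 <= slider <= 1.0:
        raise ValueError("slider must lie between 0.0 and 1.0")
    return {
        interval: (1.0 - slider) * JOY_WEIGHTS[interval]
        + slider * LAMENT_WEIGHTS[interval]
        for interval in JOY_WEIGHTS
    }


def transform_melody(start_pitch, length, slider, rng=None):
    """Build a melody by repeatedly drawing a weighted random interval."""
    rng = rng or random.Random()
    if length < 1:
        return []
    weights = interval_weights(slider)
    intervals = list(weights)
    melody = [start_pitch]
    for _ in range(length - 1):
        step = rng.choices(intervals, weights=[weights[i] for i in intervals])[0]
        # Keep the result inside the valid MIDI pitch range.
        melody.append(min(127, max(0, melody[-1] + step)))
    return melody


phrase = transform_melody(60, 8, slider=0.25, rng=random.Random(0))
```

Moving the slider towards either pole never rules an interval out entirely in this sketch; it only makes some intervals more likely than others, which keeps the output varied from one trigger to the next.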

ScreenPlay’s GUI is currently designed as a TouchOSC template, with the playing surface mimicking that of an Ableton Push. When in multi mode, each user (up to sixteen in total) is able to interact with the system through a single Ableton Live set via individual GUIs, which grant them influential control over a specific part within the arrangement of the musical output of the system. In single mode, the user can control up to sixteen distinct parts from a single GUI, with the interface updating in real time to display the status of the currently selected part. In order to achieve this, large swathes of the three Max for Live devices that constitute the system as a whole are dedicated to facilitating the two-way transmission of OSC messages between Ableton Live and TouchOSC. The assignment of pitches to the “pads” on the playing surface of the GUI, depending on the user-defined key signature/scale selection, is also currently undertaken by one of the three Max for Live devices. As such, I plan to develop a dedicated GUI in Lemur, which can process most of these tasks internally, thus reducing the demand on the CPU of the central computer system. It will also allow a wired connection between the GUI and the central system, if so desired by the user(s)/performer(s), thus negating the impact of weak/fluctuating WiFi on the reliability and fluidity of the interactive experience. While it is already possible to bypass the playing surface displayed on the GUI and use any MIDI controller to play and record notes into the system, it is still necessary to use the TouchOSC GUI to control the generative and transformative algorithms. Providing the option to bypass the GUI entirely by affording control over these aspects of the system directly through the Max for Live devices themselves is also an intended development of ScreenPlay.
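As a rough illustration of what assigning pitches to pads from a key/scale selection involves, here is a small Python sketch. It is not taken from the Max for Live devices: the `SCALES` table, the `pad_pitches` function and the simple ascending layout (one scale degree per pad) are assumptions for the example, and the real playing surface may well arrange its pads differently.

```python
# Semitone offsets of each scale degree above the root.
SCALES = {
    "major": (0, 2, 4, 5, 7, 9, 11),
    "natural_minor": (0, 2, 3, 5, 7, 8, 10),
}


def pad_pitches(root, scale, num_pads=16):
    """Return one MIDI pitch per pad, ascending through the chosen scale.

    root: MIDI note number of the key's tonic (e.g. 60 for middle C).
    Pads that would exceed the MIDI range (above 127) are left out.
    """
    steps = SCALES[scale]
    pitches = []
    for pad in range(num_pads):
        octave, degree = divmod(pad, len(steps))
        pitch = root + 12 * octave + steps[degree]
        if pitch > 127:
            break
        pitches.append(pitch)
    return pitches


c_major_pads = pad_pitches(60, "major", num_pads=8)
# c_major_pads == [60, 62, 64, 65, 67, 69, 71, 72]
```

Because every pad is restricted to notes of the selected scale, a lookup like this is cheap enough to run on the GUI device itself, which is part of the appeal of moving the task out of the central system.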

ScreenPlay will undoubtedly be made publicly available at some point or another, although I am not yet sure when or in what form. As already pointed out, there are definite improvements to the design and functionality of the system that can be made – some more pressing than others – and I would like to take the time to refine some of these aspects of the system before releasing it.

Daft Punk | Tron: Legacy (End Titles)

This is a studio performance of Tron: Legacy (End Titles) by Daft Punk that I recorded all the way back at the end of 2015, but, due to being preoccupied with other things in the time since then, have only just gotten round to uploading. In it I’m using a MicroBrute for the bass, Volca Beats for the drums, a combination of six different patches on the Virus for the two lead sounds, and an Ableton Push and APC40 for controllers.